About Me

Hi, I'm Richard Walls. I'm a Content Designer who transforms complex enterprise systems into intuitive user experiences. My journey began deep in the technical trenches, documenting intricate application server integrations and system architectures. That foundation gave me something unique: the ability to truly understand the complexity users face, and to design content that bridges the gap between complex systems and the people who use them.

Over more than seven years, I've evolved from explaining how systems work to designing how users experience them. I specialize in information architecture optimization and user-centered content strategies that drive engagement across enterprise AI software. My technical communication background, combined with hands-on application of privacy and cybersecurity principles, enables me to create secure, ethical, and human-centric solutions.

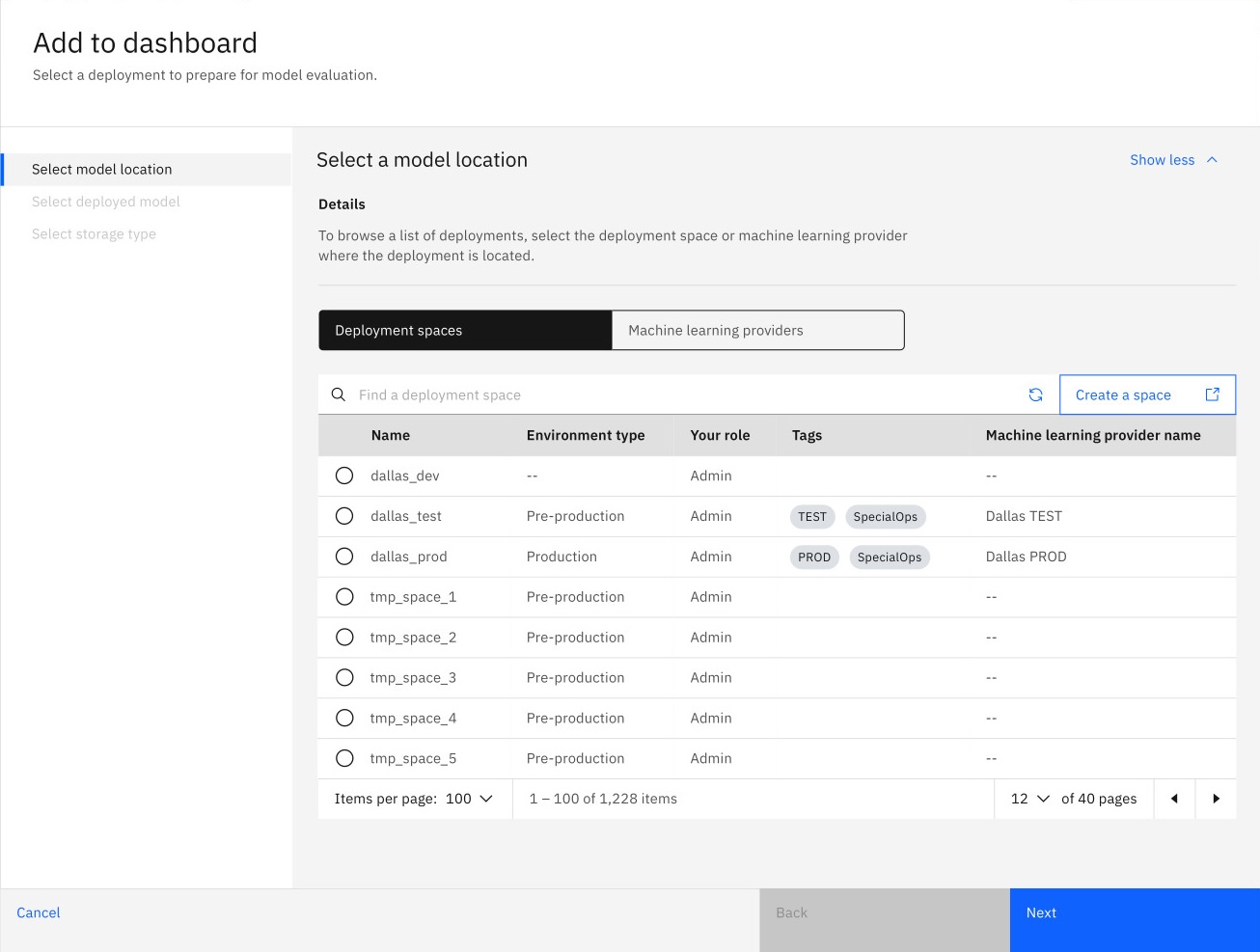

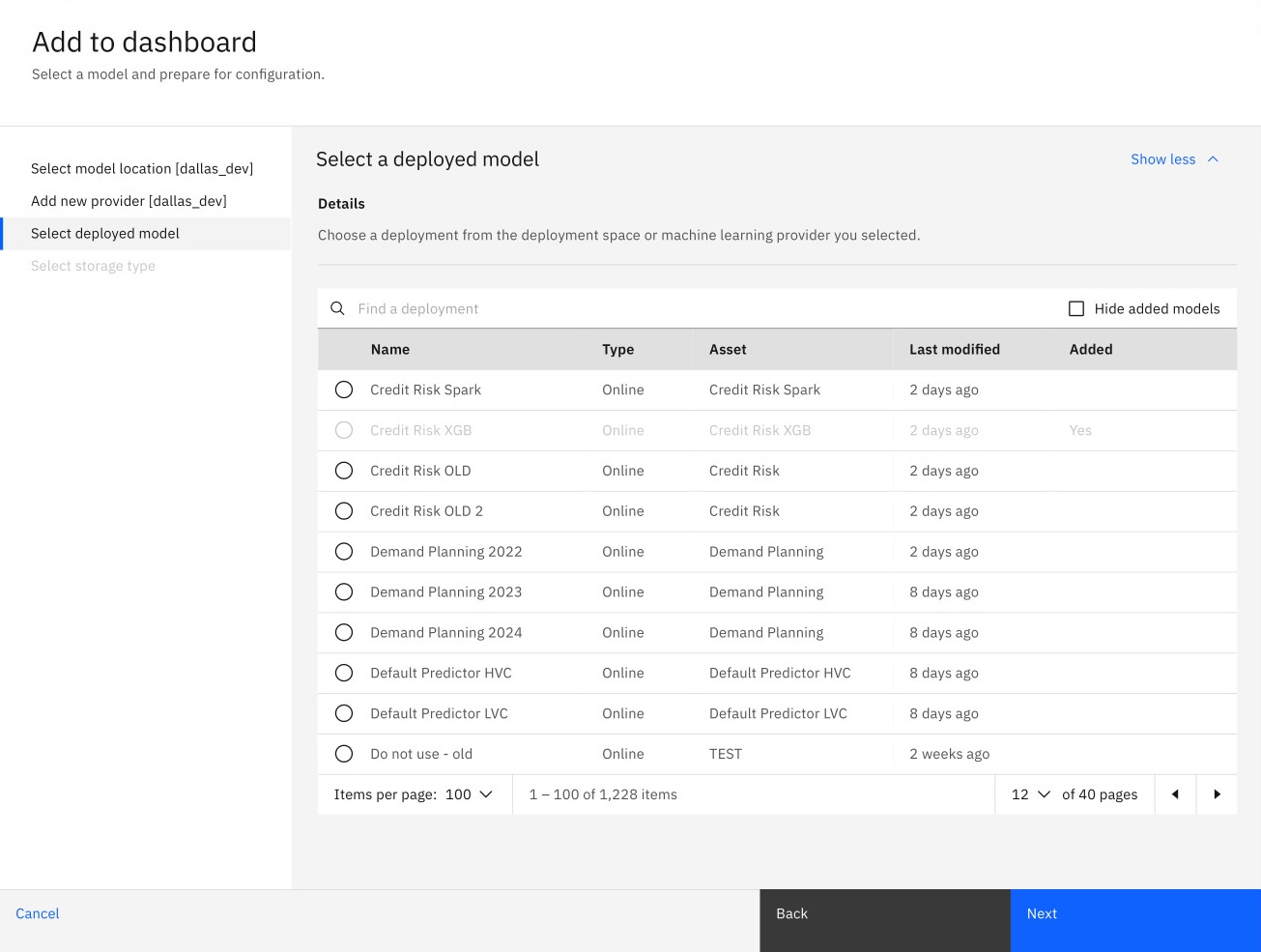

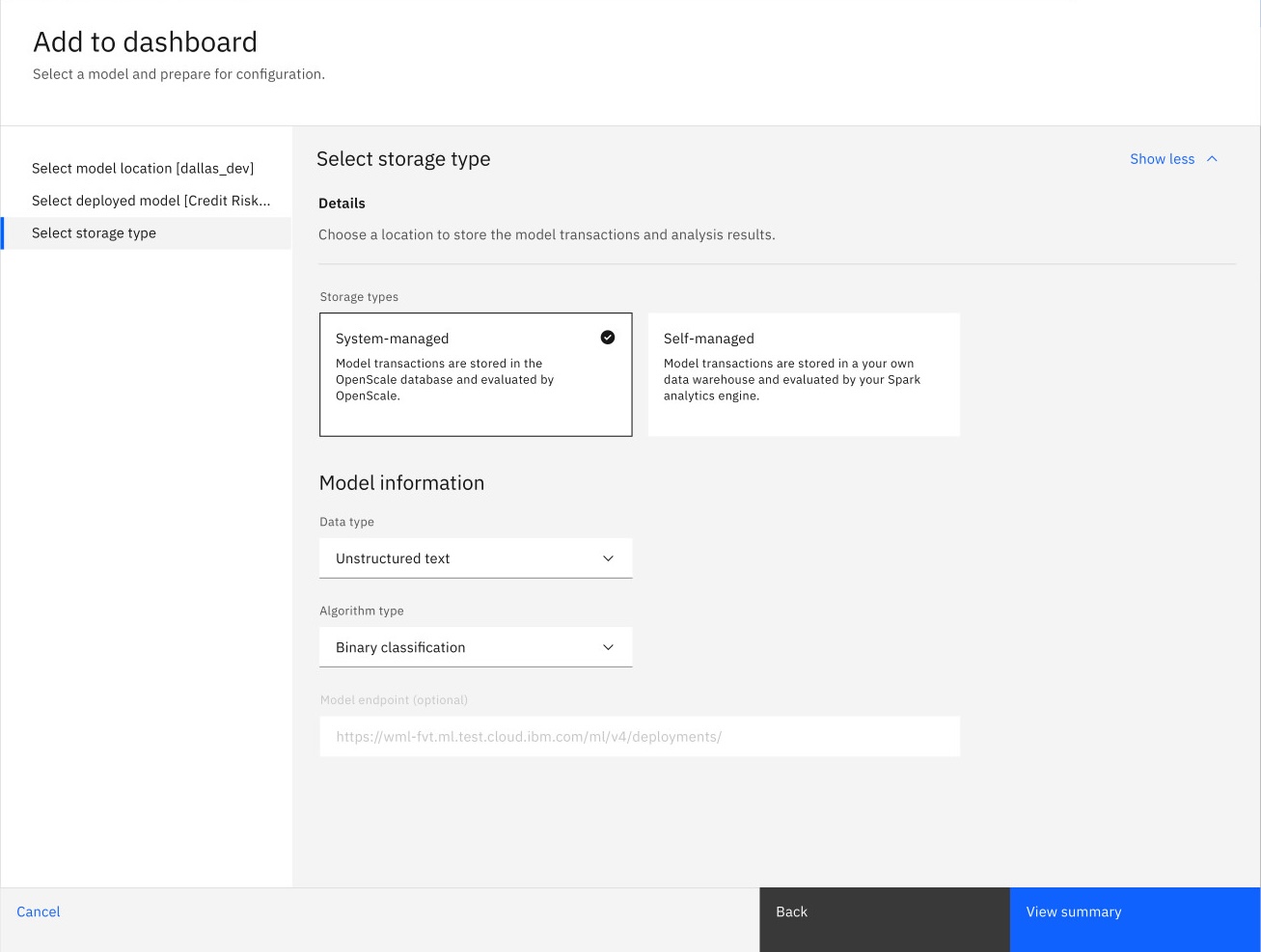

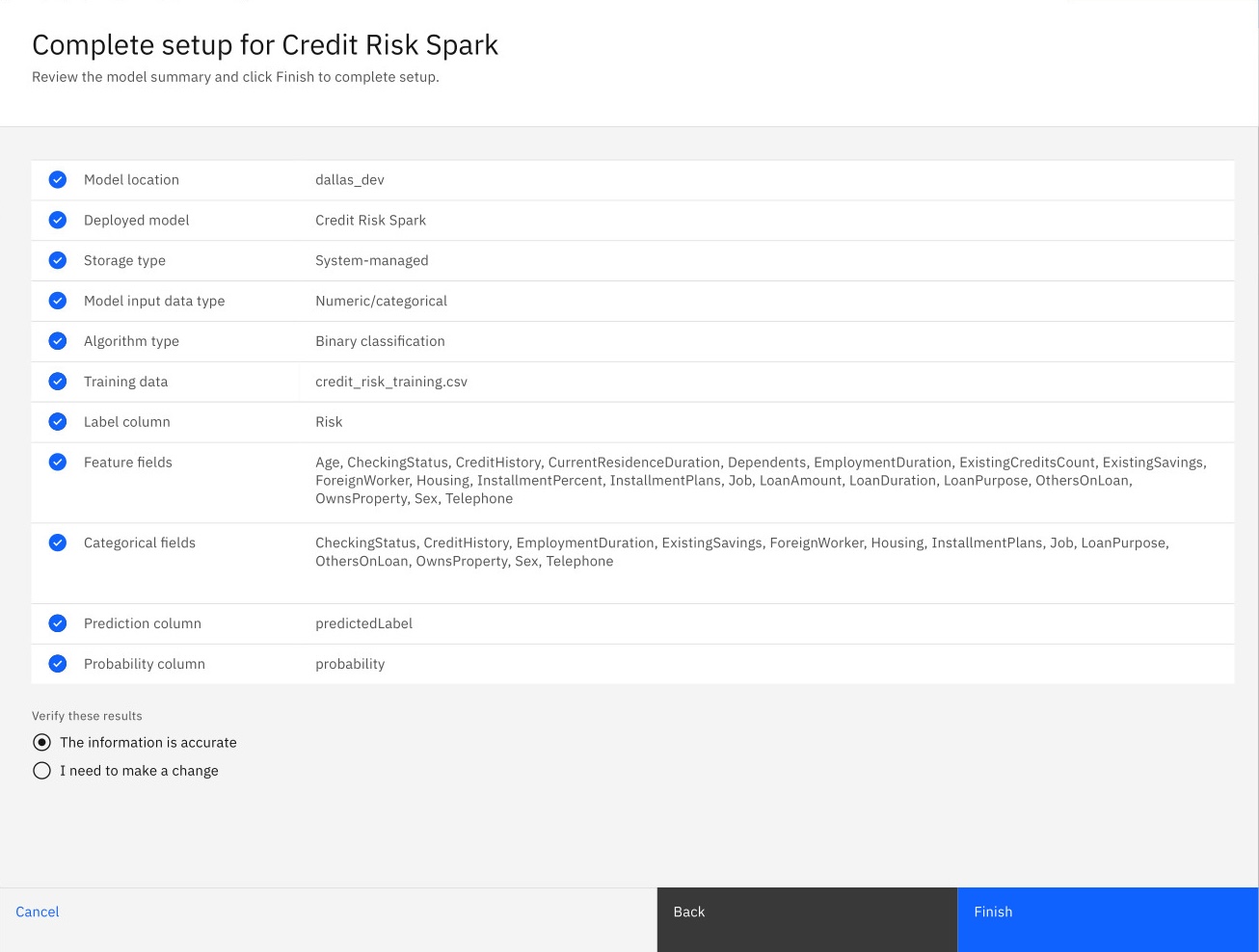

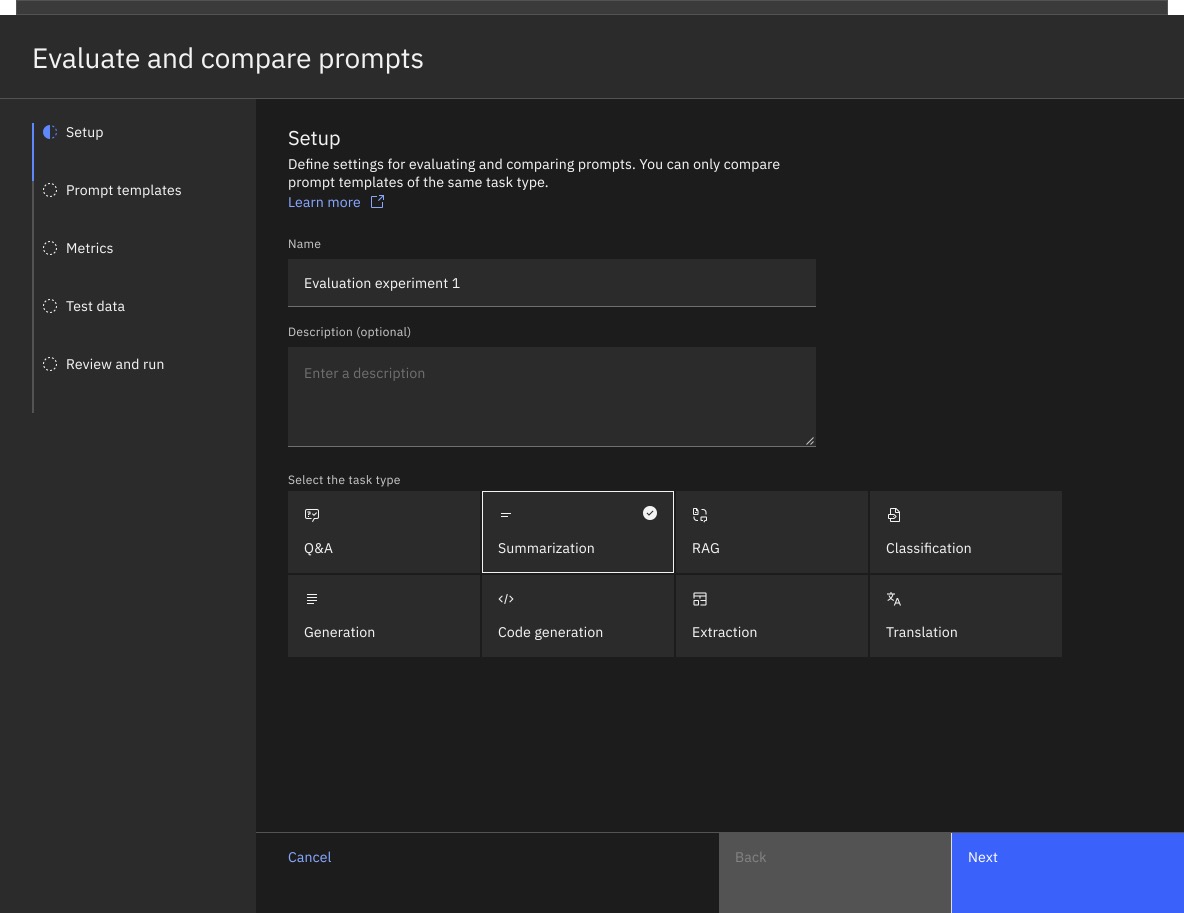

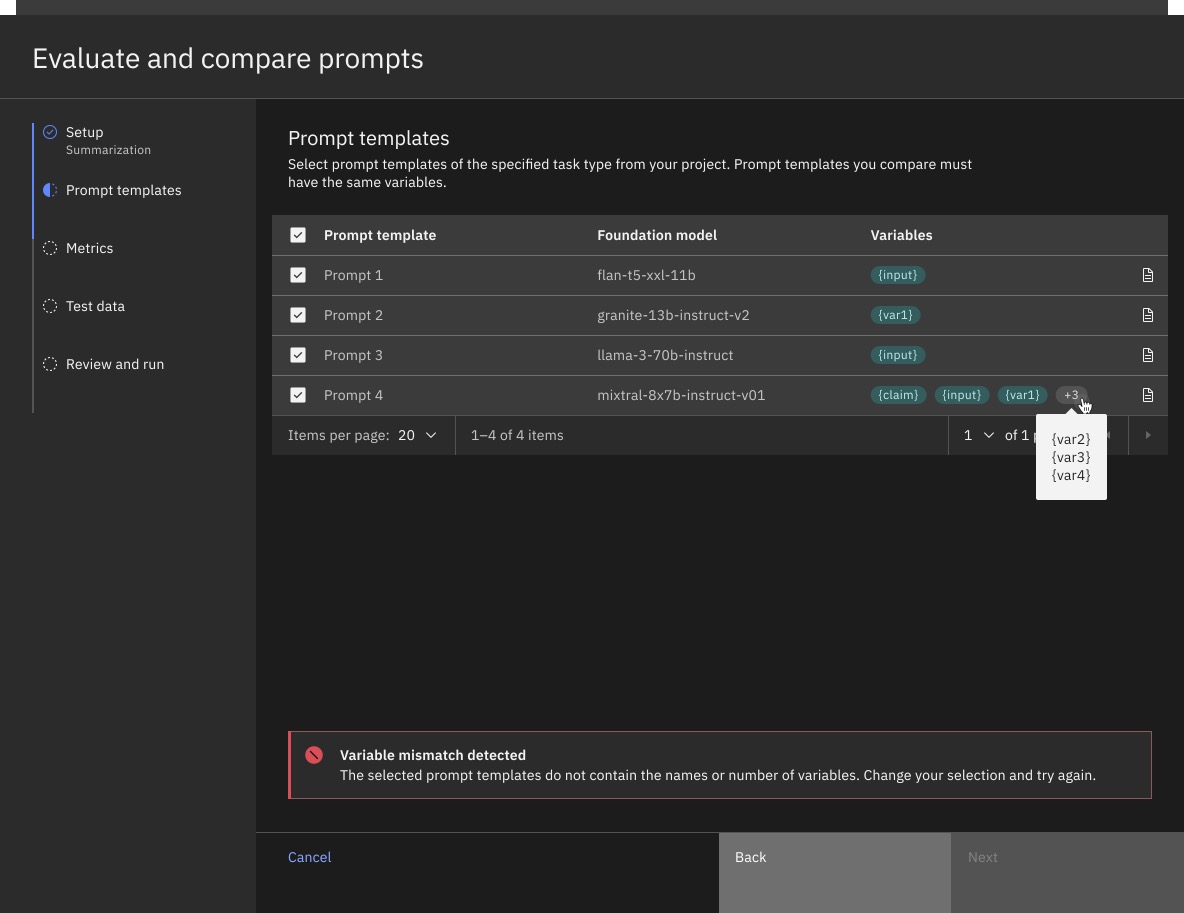

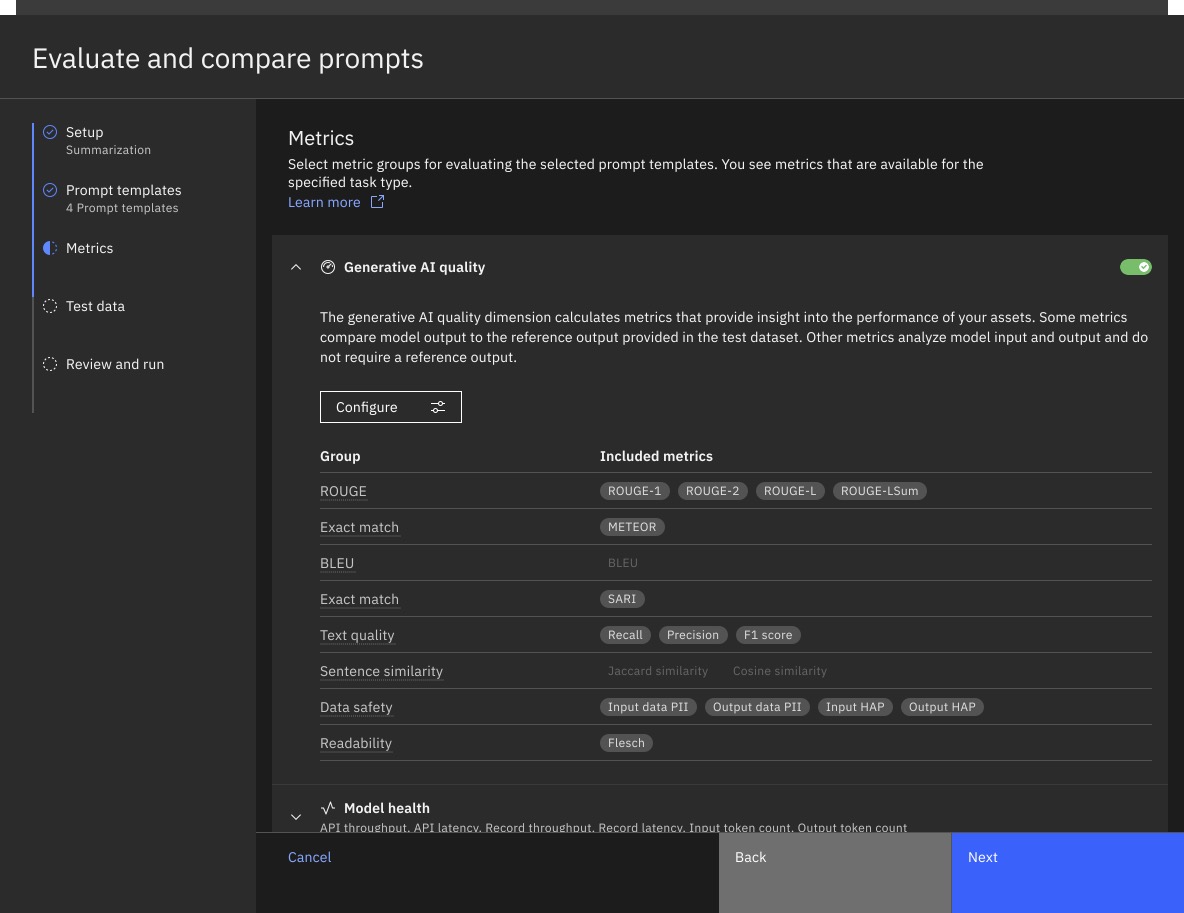

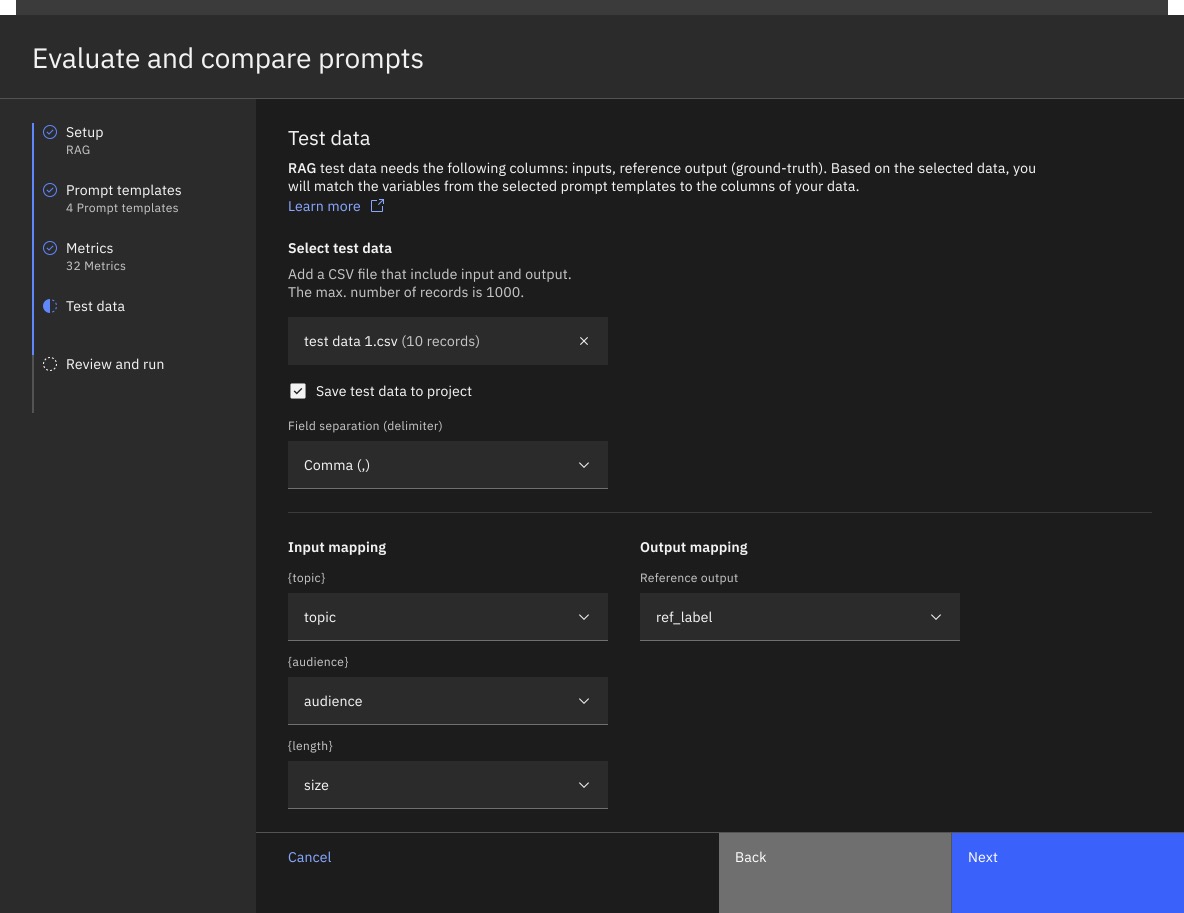

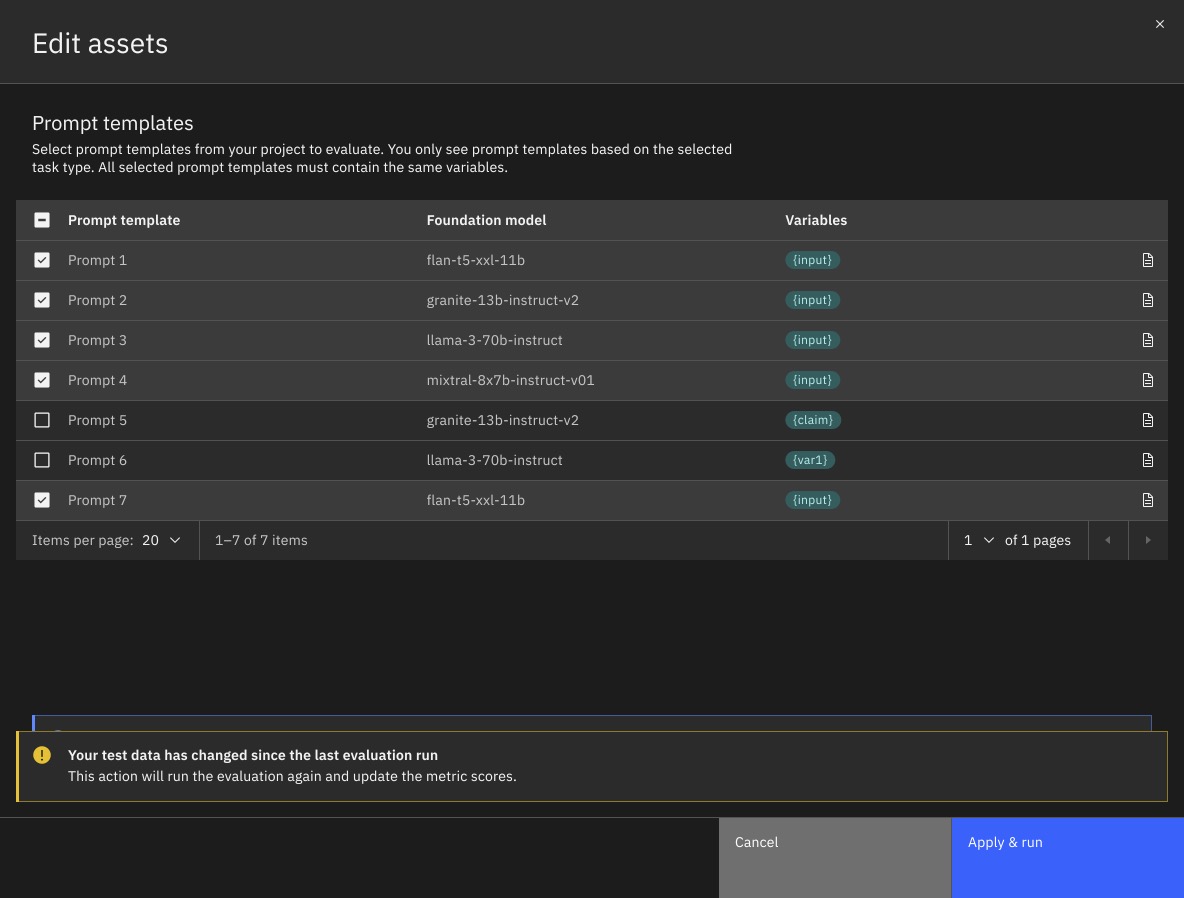

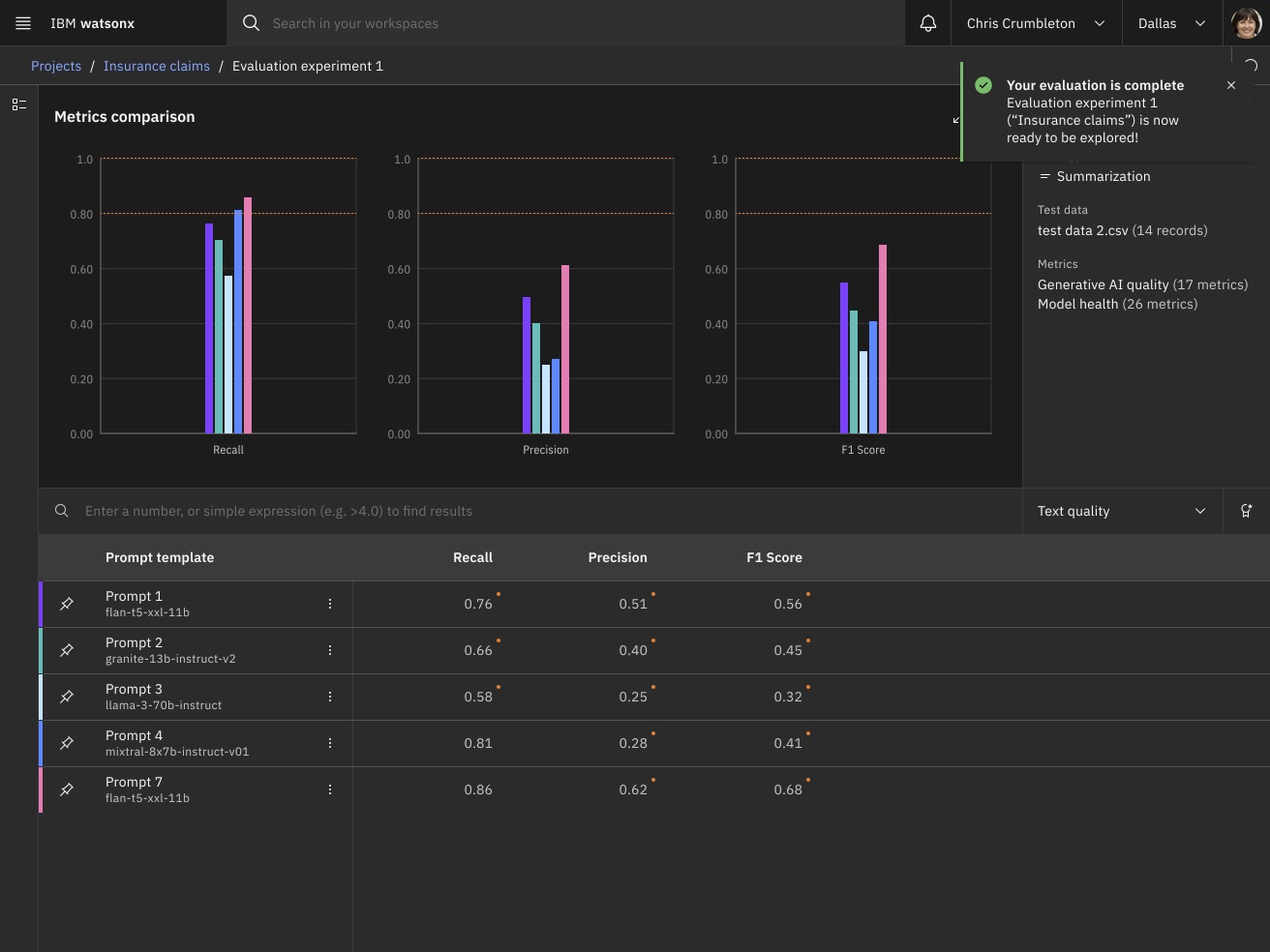

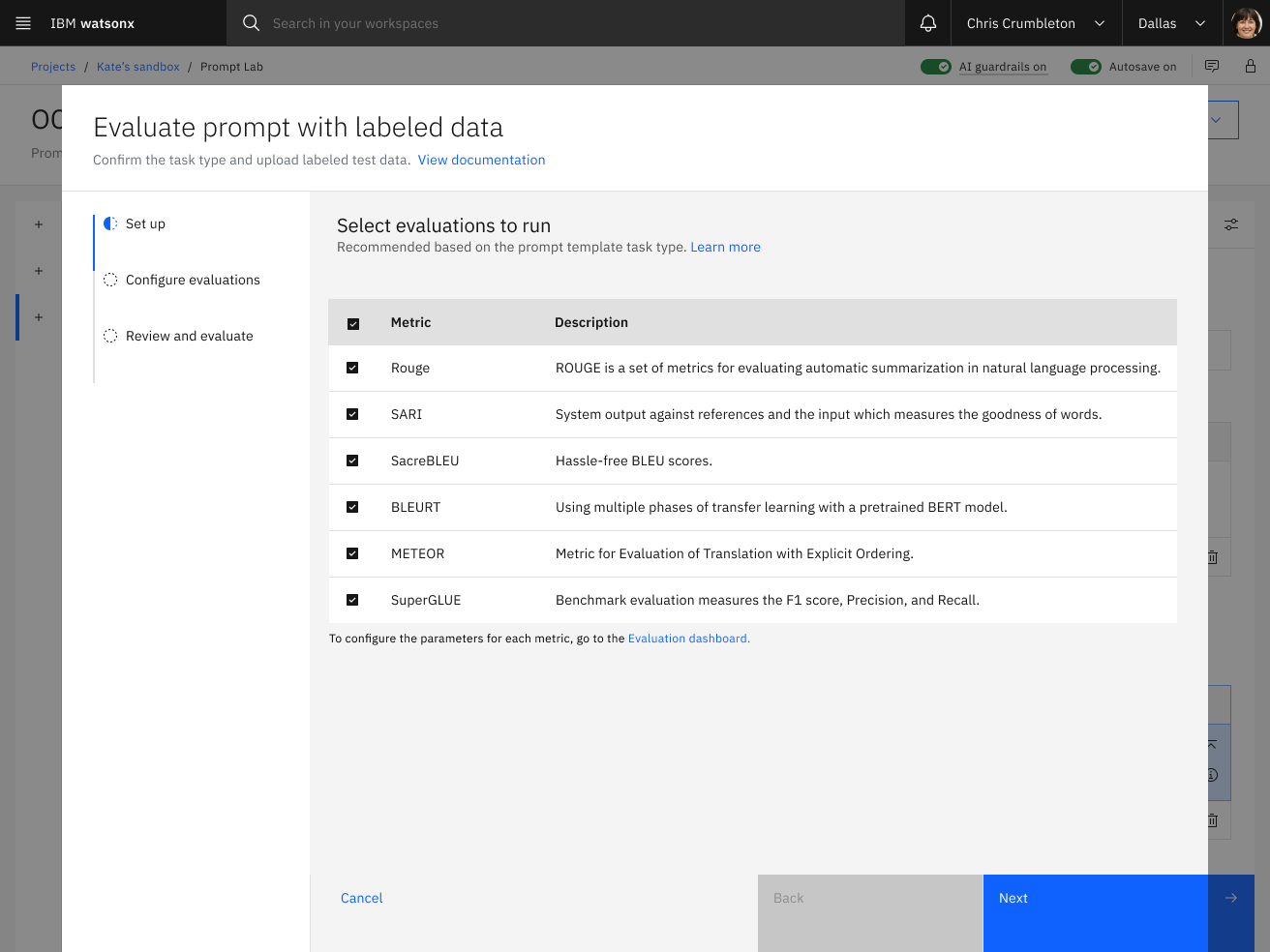

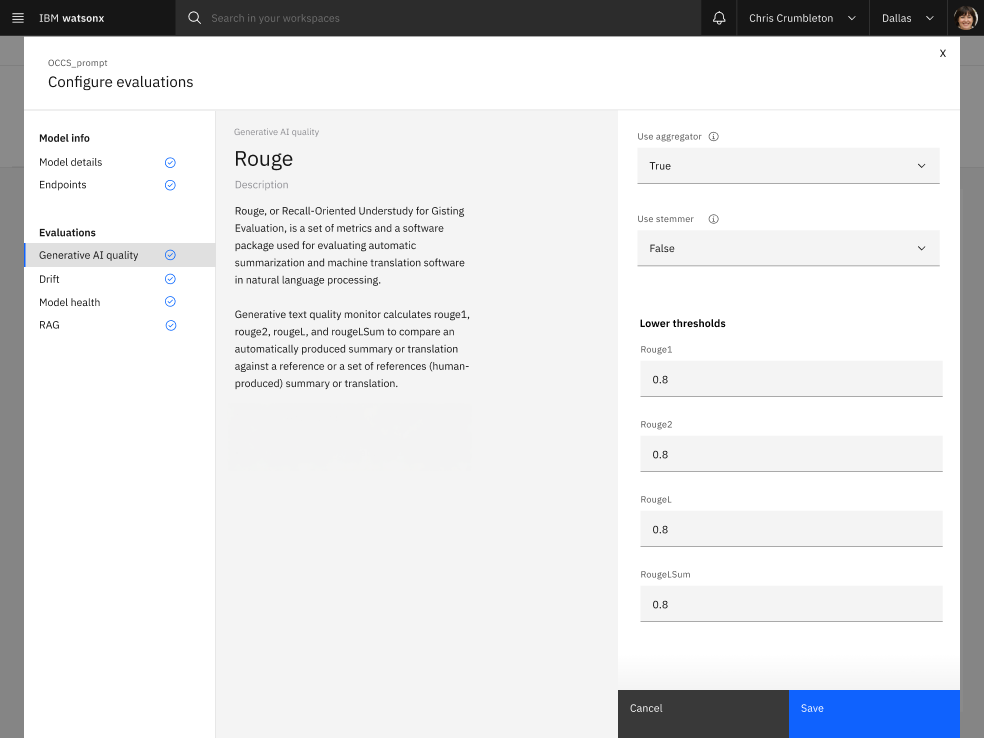

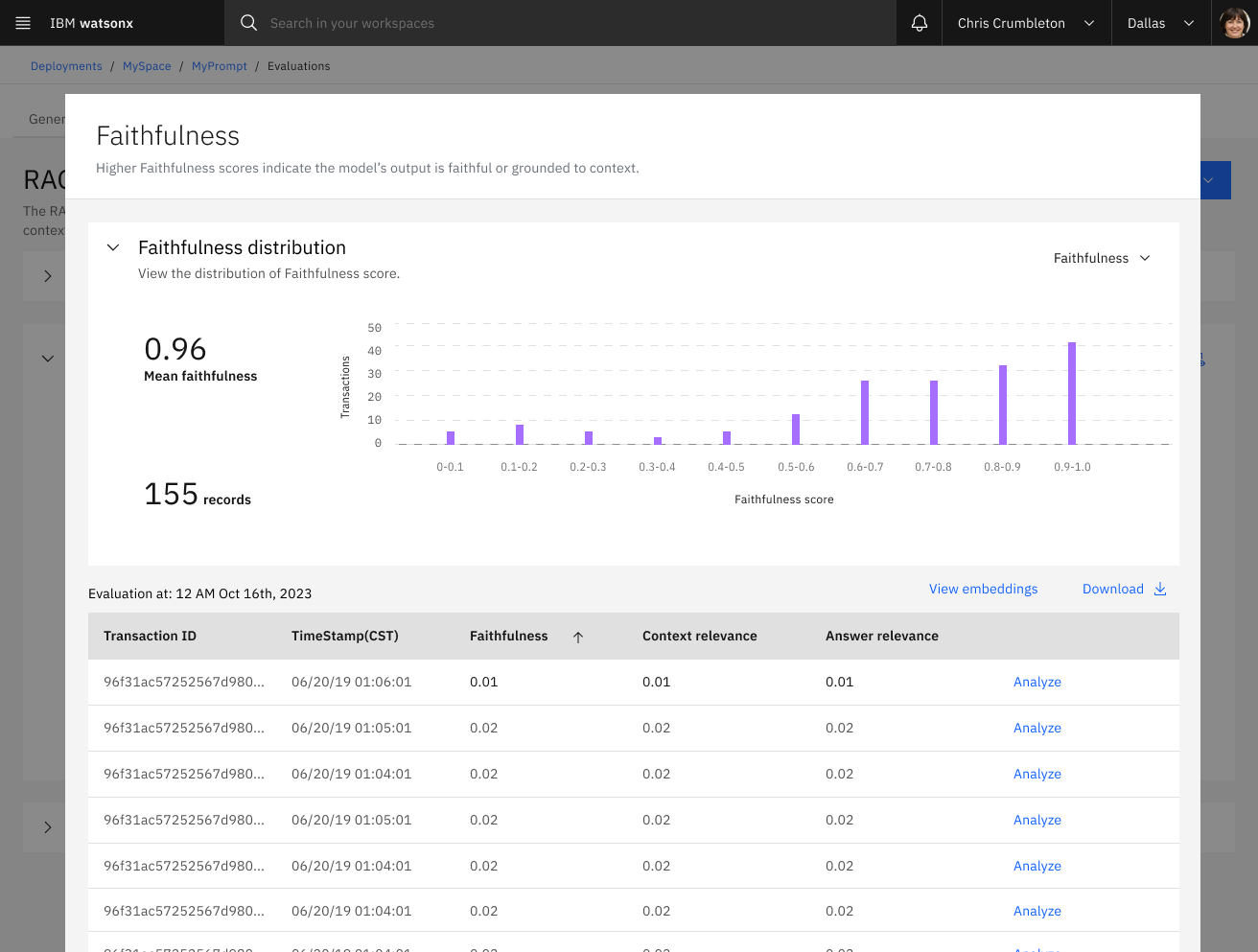

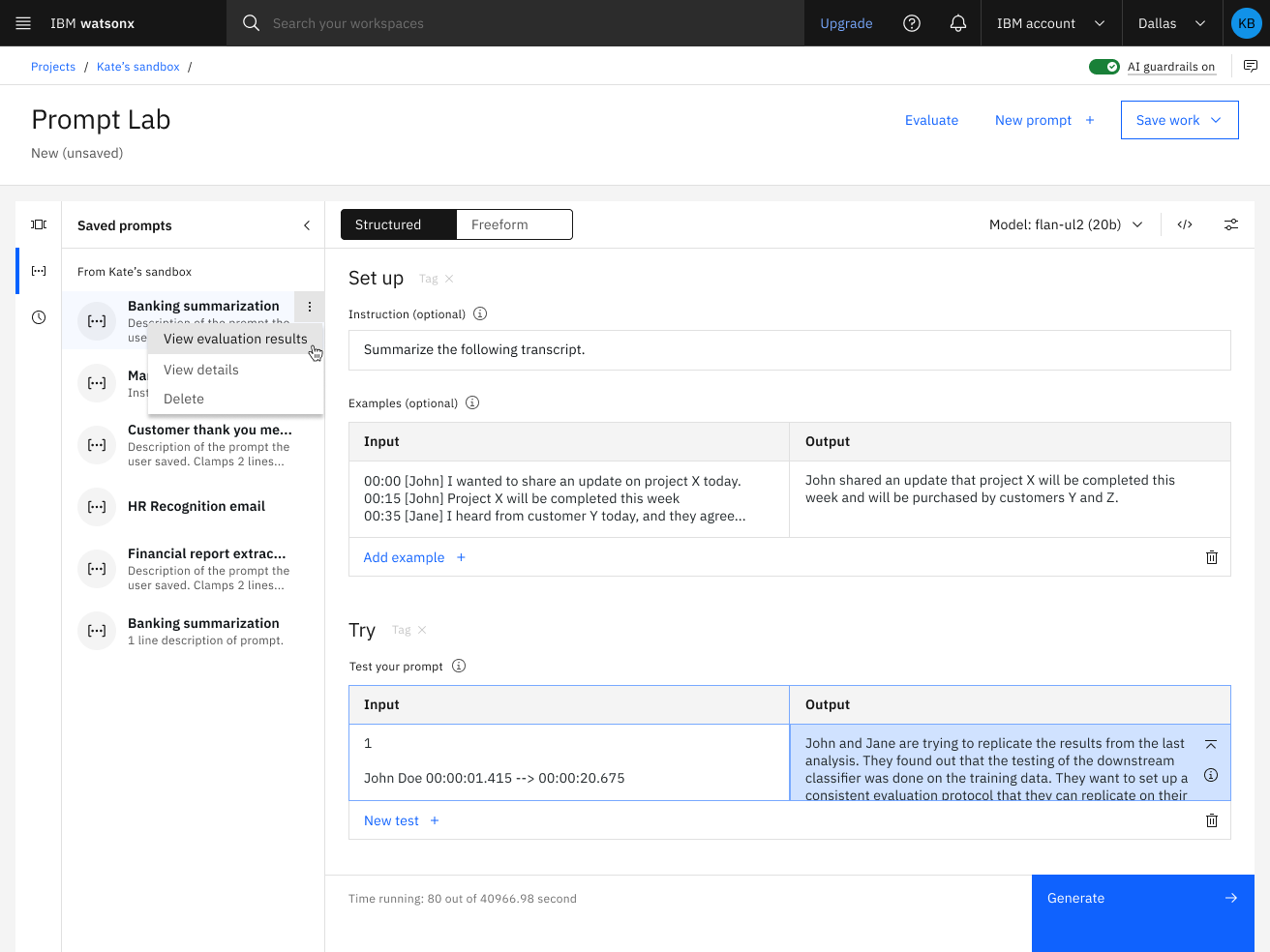

What sets me apart is this technical empathy: I've faced the same integration challenges developers struggle with, and that experience informs every design decision I make today. Whether I'm simplifying onboarding for an AI governance platform or creating contextual guidance for generative AI evaluations, I draw on that deep systems knowledge to anticipate user needs and remove friction before it happens.

I create content strategies that don't just inform; they accelerate adoption, reduce the support burden, and deliver measurable business outcomes through operational efficiency.

Education

- M.S. Technical Communication - North Carolina State University

- B.A. Mass Communications - Savannah State University

Certifications

- Security+ (CompTIA)

- Certified Information Privacy Professional (IAPP)